Import from a Database

Storing sensitive data in plain text format in a database poses a significant risk to both users and organizations. In the event of a security breach this data could be accessed and exploited, potentially resulting in identity theft, financial loss, or other serious consequences. Hackers and malicious actors are constantly on the lookout for vulnerabilities in databases containing sensitive data, making it crucial for developers to take steps to secure this information.

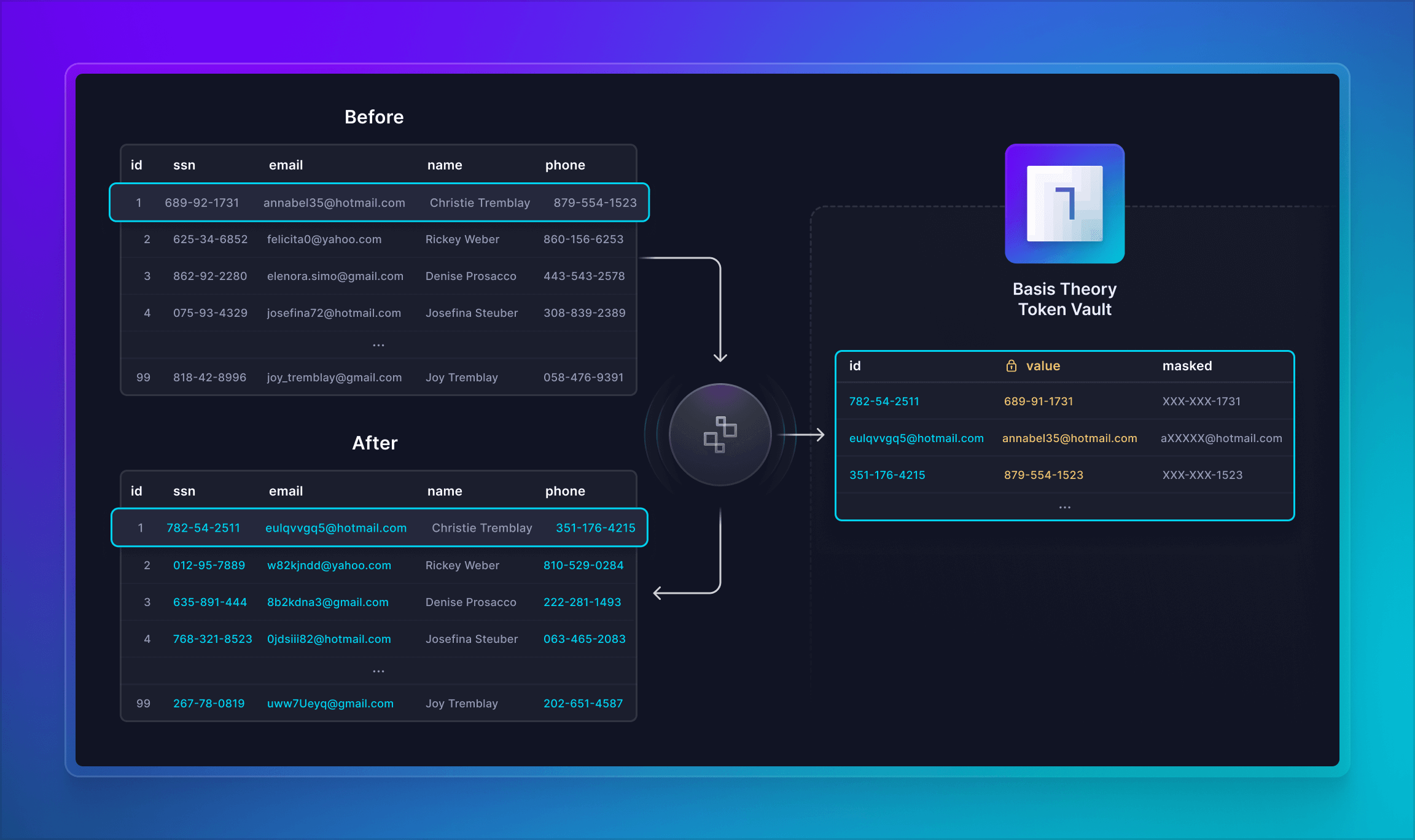

This guide explores how to perform API-based migration of Personal Identifiable Information (PII) from an existing database into the Basis Theory platform.

We also support batch file processing for larger datasets or cases where an API-based migration isn't suitable. If you need help with your migration plan - please reach out.

Setup

In this section, we will initialize and seed a database using the stack below:

- PostgreSQL for the relational database;

- Prisma for the ORM layer;

- TypeScript programming language for the migration script;

Initialize a new project inside a blank folder using your favorite package manager:

- npm

- yarn

npm init

yarn init

Follow the CLI instructions to create a new package.

Install Dependencies

Install the development dependencies within your application:

- npm

- yarn

npm install --save-dev @types/node prisma typescript

yarn add -D @types/node prisma typescript

Now, install runtime dependencies:

- npm

- yarn

npm install --save @basis-theory/basis-theory-js @prisma/client ts-node @faker-js/faker

yarn add @basis-theory/basis-theory-js @prisma/client ts-node @faker-js/faker

Prepare Environment

If you don't have a PostgreSQL database running yet, you can start it using Docker, by running the following command:

docker run --name postgres -e POSTGRES_PASSWORD=basistheory! -p 5432:5432 -d postgres

Create a .env file and add your database connection url to it:

DATABASE_URL="postgresql://postgres:basistheory!@localhost:5432/database?schema=public"

To securely import data via Server-to-Server, you'll need a Private Application to grant you an API Key used to authenticate against Basis Theory services. Click here to create one.

This will create an application with the token:create permission. Copy the API Key to your .env file:

DATABASE_URL="postgresql://username:password@localhost:5432/database?schema=public"

BASIS_THEORY_API_KEY=<API_KEY>

Initialize Database

Initialize Prisma by running:

- npm

- yarn

npx prisma init --datasource-provider postgresql

yarn prisma init --datasource-provider postgresql

Add the Person model to the prisma/schema.prisma file:

generator client {

provider = "prisma-client-js"

}

datasource db {

provider = "postgresql"

url = env("DATABASE_URL")

}

model Person {

id Int @id @default(autoincrement())

ssn String @unique

email String @unique

name String

phoneNumber String

birthDate String

}

Instruct Prisma to create a migration file and apply it to the database schema, which creates a Person table:

- npm

- yarn

npx prisma migrate dev --name init

yarn prisma migrate dev --name init

Let's seed our database with fake sensitive data. For that, we can use Faker JS to populate the Person table.

Create a new file prisma/seed.ts with the following contents:

import { PrismaClient } from "@prisma/client";

import { faker } from "@faker-js/faker";

const prisma = new PrismaClient();

async function main() {

const records = await prisma.person.createMany({

data: Array.apply(null, Array(100)).map((_, i) => ({

id: i,

ssn: faker.helpers.regexpStyleStringParse(

"[0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9][0-9][0-9]"

),

email: faker.internet.email().toLowerCase(),

name: faker.name.fullName(),

phoneNumber: faker.phone.number("###-###-####"),

birthDate: faker.date.birthdate().toISOString(),

})),

});

console.log(`${records.count} "Person" records created successfully.`);

}

main()

.then(async () => {

await prisma.$disconnect();

})

.catch(async (e) => {

console.error(e);

await prisma.$disconnect();

process.exit(1);

});

Add the prisma.seed field to your package.json file:

{

"name": "my-project",

"version": "1.0.0",

"prisma": {

"seed": "ts-node prisma/seed.ts"

},

...

}

Run the following command to create the Person records in the database:

- npm

- yarn

npx prisma db seed

yarn prisma db seed

Migrate Data

In this section, we will import existing sensitive data from our database into Basis Theory platform by calling the Tokenize API. The elected data points are ssn, email and phoneNumber, which after being migrated, will be replaced in the database with synthetic equivalents, aka Token IDs, by employing different aliasing techniques. Additionally, we will also customize the masking of the data points for securely revealing the data partially to our systems. For example:

| Field | Value | Token Id (alias) | Masked Data |

|---|---|---|---|

ssn | 689-92-1731 | 782-54-2511 | XXX-XX-1731 |

email | annabel35@hotmail.com | eulqvvgq5@hotmail.com | aXXXXX@hotmail.com |

phoneNumber | 879-554-1523 | 351-176-4215 | XXX-XXX-1523 |

Create a migrate.ts file at the root of your project and add a iteratePersonTable function. We will use Prisma's cursor-based pagination to walk through the Person table records and tokenize them in chunks of 10:

import { PrismaClient, Person } from "@prisma/client";

const prisma = new PrismaClient();

const chunkSize = 10;

async function iteratePersonTable(cursor?: number): Promise<Person[]> {

return prisma.person.findMany({

take: chunkSize,

orderBy: {

id: "asc",

},

...(cursor

? {

skip: 1,

cursor: {

id: cursor,

},

}

: {}),

});

}

The function above receives an id-based cursor and returns a page (or chunk) containing 10 Person records starting off the cursor position. If no cursor is informed, then it returns the first page.

Add functions to create token payloads, specifying expected aliasing and masking patterns:

import { PrismaClient, Person } from "@prisma/client";

const prisma = new PrismaClient();

const chunkSize = 10;

async function iteratePersonTable(cursor?: number): Promise<Person[]> { ... }

function createSsnTokenPayload(data: string) {

return {

type: "social_security_number",

id: "{{ data | alias_preserve_format }}",

data,

containers: ["/pii/high/"],

privacy: {

classification: "pii",

impact_level: "high",

restriction_policy: "mask",

},

};

}

function createEmailTokenPayload(data: string) {

return {

type: "token",

id: '{{ data | split: "@" | first | alias_preserve_length }}@{{ data | split: "@" | last }}',

data,

mask: '{{ data | slice: 0 }}XXXXX@{{ data | split: "@" | last }}',

containers: ["/pii/high/"],

privacy: {

classification: "pii",

impact_level: "high",

restriction_policy: "mask",

},

};

}

function createPhoneNumberTokenPayload(data: string) {

return {

type: "token",

id: "{{ data | alias_preserve_format }}",

data,

mask: "{{ data | reveal_last: 4 }}",

containers: ["/pii/high/"],

privacy: {

classification: "pii",

impact_level: "high",

restriction_policy: "mask",

},

};

}

Now let's add our main migrate function and call it. After it is completed or errors out, we close the database connection:

import { PrismaClient, Person } from "@prisma/client";

const prisma = new PrismaClient();

const chunkSize = 10;

async function iteratePersonTable(cursor?: number): Promise<Person[]> {...}

function createSsnTokenPayload(data: string) {...}

function createEmailTokenPayload(data: string) {...}

function createPhoneNumberTokenPayload(data: string) {...}

async function migrate() {

// TODO

}

migrate()

.then(async () => {

await prisma.$disconnect();

})

.catch(async (e: any) => {

console.error(e?.data?.errors || e);

await prisma.$disconnect();

process.exit(1);

});

And finally, let's iterate through the data chunks and create a token per each sensitive data field we want to secure. After tokenization is completed, we will update the database records with the token IDs.

import { PrismaClient, Person } from "@prisma/client";

import { BasisTheory } from "@basis-theory/basis-theory-js";

const prisma = new PrismaClient();

const chunkSize = 10;

async function iteratePersonTable(cursor?: number): Promise<Person[]> {...}

function createSsnTokenPayload(data: string) {...}

function createEmailTokenPayload(data: string) {...}

function createPhoneNumberTokenPayload(data: string) {...}

async function migrate() {

process.stdout.write("Starting migration...\n");

const bt = await new BasisTheory().init(process.env.BASIS_THEORY_API_KEY);

let cursor = undefined;

do {

const chunk: Person[] = await iteratePersonTable(cursor);

cursor = chunk[chunkSize - 1]?.id;

if (chunk.length > 0) {

process.stdout.write(

`Tokenizing ${chunk.length} records from ${chunk[0].id} to ${

chunk[chunk.length - 1].id

}... `

);

const tokens = await bt.tokenize(

chunk.map((p) => ({

ssn: createSsnTokenPayload(p.ssn),

email: createEmailTokenPayload(p.email),

phoneNumber: createPhoneNumberTokenPayload(p.phoneNumber),

}))

);

process.stdout.write(`Done.\nUpdating DB... `);

const updates = chunk.map((person, i) => {

const token = (tokens as any[])[i];

return prisma.person.update({

where: {

id: person.id,

},

data: {

ssn: token.ssn.id,

email: token.email.id,

phoneNumber: token.phoneNumber.id,

},

});

});

await Promise.all(updates);

process.stdout.write(`Done.\n`);

}

} while (cursor !== undefined);

}

migrate()

.then(async () => {

await prisma.$disconnect();

})

.catch(async (e: any) => {

console.error(e?.data?.errors || e);

await prisma.$disconnect();

process.exit(1);

});

Add a start script to the package.json file to run the migrate.ts script file:

{

"name": "my-project",

"version": "1.0.0",

"scripts": {

"start": "ts-node migrate.ts"

},

"prisma": {

"seed": "ts-node prisma/seed.ts"

},

...

}

And run the migration with the following command:

- npm

- yarn

npm start

yarn start

🎉 Success! We migrated our PII from a plain text format to synthetic anonymous references, without having to change any of our existing schema.

Conclusion

By following the step-by-step instructions provided, developers can effectively ensure the security and privacy of sensitive user information while maintaining its usability under the scope of a database. Tokenization provides an effective way to protect user data by replacing identifiable information with randomly generated tokens that do not reveal any personal information. By utilizing this method, organizations can ensure that sensitive user data is protected from unauthorized access and breaches, while still allowing for effective analysis and processing of the data.

You can find some useful links below on how to interact with the data we just secured.